After nearly two weeks of announcements, OpenAI capped off its 12 Days of OpenAI livestream series with a preview of its next-generation frontier model. “Out of respect for friends at Telefónica (owner of the O2 cellular network in Europe), and in the grand tradition of OpenAI being really, truly bad at names, it’s called o3,” OpenAI CEO Sam Altman told those watching the announcement on YouTube.

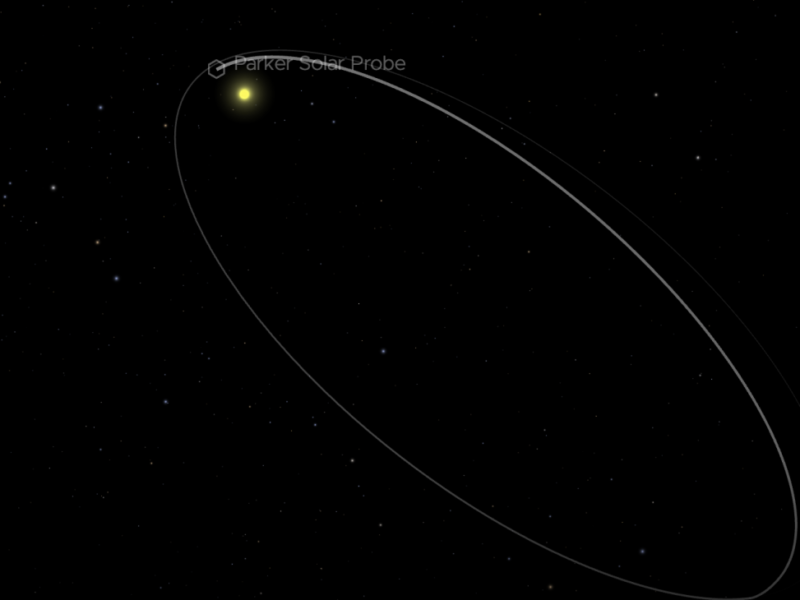

The new model isn’t ready for public use just yet. Instead, OpenAI is first making o3 available to researchers who want help with safety testing. OpenAI also announced the existence of o3-mini. Altman said the company plans to launch that model “around the end of January,” with o3 following “shortly after that.”

As you might expect, o3 offers improved performance over its predecessor, but just how much better it is than o1 is the headline feature here. For example, when put through this year’s American Invitational Mathematics Examination, o3 achieved an accuracy score of 96.7 percent. By contrast, o1 earned a more modest 83.3 percent rating. “What this signifies is that o3 often misses just one question,” said Mark Chen, senior vice president of research at OpenAI. In fact, o3 did so well on the usual suite of benchmarks OpenAI puts its models through that the company had to find more challenging tests to benchmark it against.
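The percentages line up with Chen's "one question" remark if you assume the scores are computed over 30 questions, meaning the two 15-question AIME papers held each year. OpenAI did not spell out the question count in the stream, so treat the total below as an assumption. A quick sketch of the arithmetic:

```python
# Assumption (not stated in the article): accuracy is measured over 30 questions,
# i.e. the two 15-question AIME papers held in a given year.
TOTAL_QUESTIONS = 30


def accuracy_percent(correct: int, total: int = TOTAL_QUESTIONS) -> float:
    """Return accuracy as a percentage rounded to one decimal place."""
    return round(100 * correct / total, 1)


def missed_questions(percent: float, total: int = TOTAL_QUESTIONS) -> int:
    """Return the nearest whole number of missed questions for a given accuracy."""
    return total - round(percent / 100 * total)


print(accuracy_percent(29))    # 96.7
print(missed_questions(96.7))  # 1
print(missed_questions(83.3))  # 5
```

Under that assumption, o3's 96.7 percent works out to 29 of 30 correct, while o1's 83.3 percent corresponds to 25 of 30.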

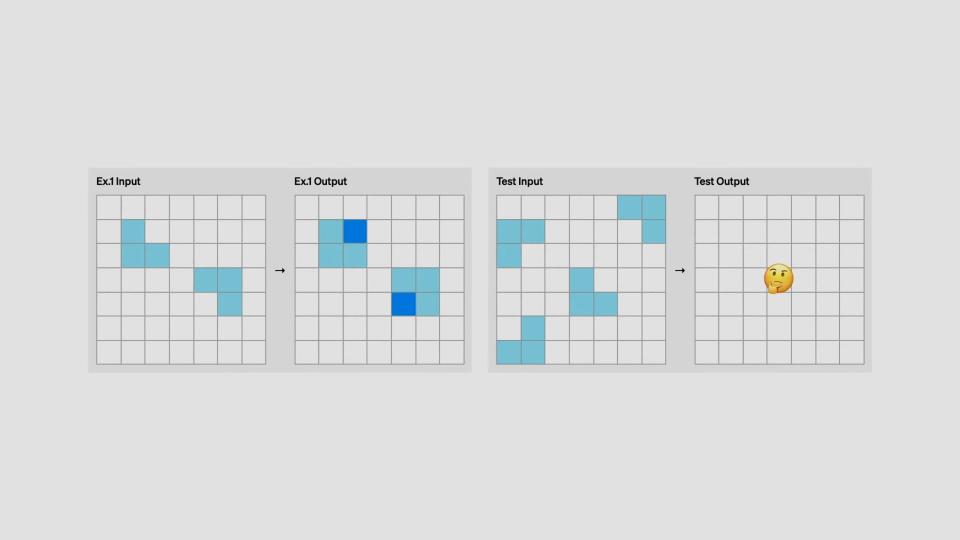

One of those is ARC-AGI, a benchmark that tests an AI algorithm’s ability to intuit and learn on the spot. According to the test’s creator, the non-profit ARC Prize, an AI system that could successfully beat ARC-AGI would represent “an important milestone toward artificial general intelligence.” Since its debut in 2019, no AI model has beaten ARC-AGI. The test consists of input-output questions that most people can figure out intuitively. For instance, in the example above, the correct answer would be to create squares out of the four polyominos using dark blue blocks.

On its low-compute setting, o3 scored 75.7 percent on the test. With additional processing power, the model achieved a rating of 87.5 percent. “Human performance is comparable at 85 percent threshold, so being above this is a major milestone,” according to Greg Kamradt, president of the ARC Prize Foundation.

OpenAI also showed off o3-mini. The new model uses OpenAI’s recently announced Adaptive Thinking Time API to offer three different reasoning modes: Low, Medium and High. In practice, this allows users to adjust how long the software “thinks” about a problem before delivering an answer. According to a graph OpenAI shared, o3-mini can achieve results comparable to the company’s current o1 reasoning model, but at a fraction of the compute cost. As mentioned, o3-mini will arrive for public use ahead of o3.
